Remote Visualization and Computational Steering for Large-Scale Distributed Systems

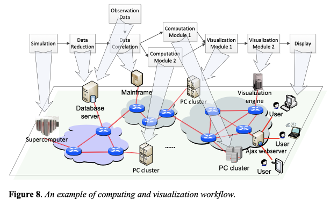

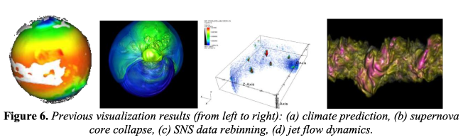

This project aims to develop a web-based system for remote visualization, computational steering, and statistical analysis. It supports ultra-scale computational science research conducted by geographically distributed, collaborating scientists working with either an ongoing streaming simulation or archived datasets in a grid environment. Unlike conventional “launch-and-leave” batch computations, the system enables: (i) continuous monitoring of the variables and results of an ongoing remote simulation or processing job using ParaView visualization tools, (ii) interactive specification of selected computational parameters to steer the simulation or visualization for future timesteps, and (iii) statistical analysis of the produced datasets with graph plotting and annotation support. Such distributed applications usually involve nationally or globally deployed heterogeneous resources, including supercomputers, workstations, network infrastructures, and rendering engines, whose available capacities are highly unpredictable.
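The steering idea in (ii) can be pictured as a simulation that checks for parameter updates between timesteps. The sketch below is only an illustration under stated assumptions: the toy decay model, the `rate` parameter, and the names `simulate` and `commands` are hypothetical and are not taken from the project's code.

```python
import queue

def simulate(steps, params, steering_queue):
    """Toy simulation that applies queued parameter updates between timesteps."""
    params = dict(params)  # work on a copy so the caller's dict is untouched
    value = 1.0
    for step in range(steps):
        # Drain any steering commands that arrived since the last timestep.
        while True:
            try:
                params.update(steering_queue.get_nowait())
            except queue.Empty:
                break
        # Hypothetical model: decay governed by the steerable `rate`.
        value *= 1.0 - params["rate"]
        # A real system would stream this result to the remote viewer.
        yield step, params["rate"], value

commands = queue.Queue()
for step, rate, value in simulate(4, {"rate": 0.1}, commands):
    print(step, rate, round(value, 4))
    if step == 1:
        commands.put({"rate": 0.5})  # takes effect from step 2 onward
```

Because updates are only read at the start of a timestep, a steering request never changes a step that is already in progress; it affects future timesteps only.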

Enhancing Accessibility: A Natural Human-Computer Interaction System Using Hand Gestures and Voice for Disabled Populations

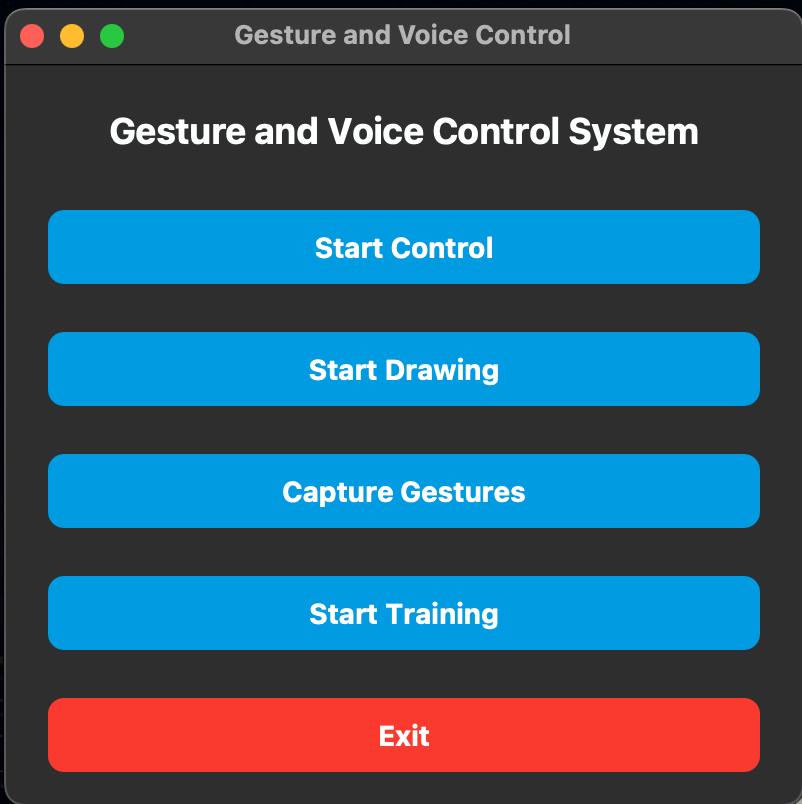

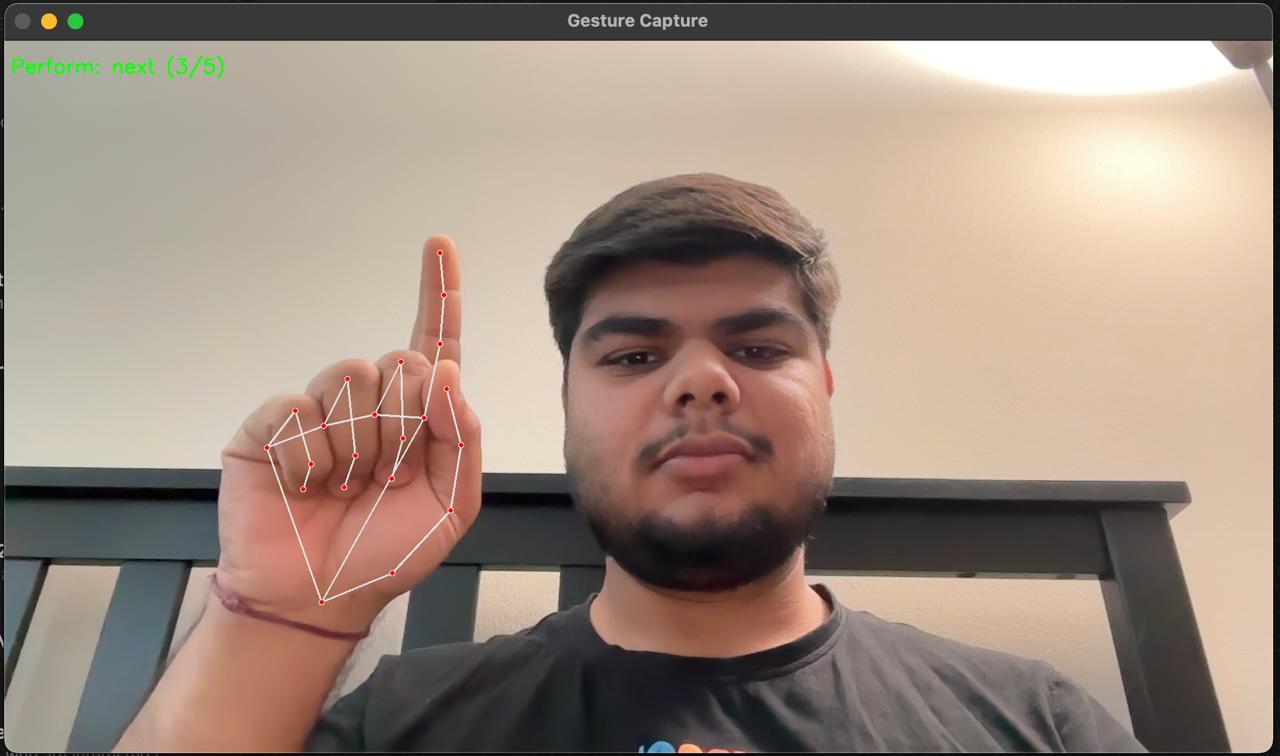

Around 26% of Americans live with some form of disability. These disabilities typically fall into four primary categories: visual impairments, auditory impairments, motor disabilities, and cognitive disabilities. For many of these individuals, interacting with digital devices and multimedia content is very challenging. This project presents an application that enables control of multimedia content through voice commands and static hand gestures. It provides an intuitive, natural way to interact with slides, images, videos, and audio without traditional input devices such as keyboards and mice, improving accessibility and user experience for users with mobility impairments or low vision. A key feature is virtual writing: users can draw or erase in mid-air, and the writing and drawing are projected onto the screen. The software uses MediaPipe for gesture recognition, SpeechRecognition for voice command processing, and PyAutoGUI for automation, making it suitable for various environments, including classrooms and conferences. Additionally, users can customize hand gestures for specific commands, with newly captured gestures used to retrain the model.
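As a rough illustration of how a static hand gesture might be mapped to a command, the sketch below counts raised fingers from hand landmarks. It assumes the 21-point hand landmark layout used by MediaPipe Hands (fingertips at indices 8, 12, 16, 20 and PIP joints at 6, 10, 14, 18) with image coordinates in which y grows downward. The gesture-to-command table and the function names are hypothetical; the project's actual model is trained on captured gestures and is not shown here.

```python
# Fingertip and PIP-joint indices in the 21-point hand landmark layout
# (index, middle, ring, pinky). The thumb is ignored in this simplified sketch.
FINGER_TIPS = (8, 12, 16, 20)
FINGER_PIPS = (6, 10, 14, 18)

# Hypothetical mapping from the number of raised fingers to a command name.
COMMANDS = {1: "next_slide", 2: "previous_slide", 4: "pause"}

def count_raised_fingers(landmarks):
    """Count fingers whose tip is above its PIP joint (smaller y is higher)."""
    if len(landmarks) != 21:
        raise ValueError("expected 21 (x, y) landmarks")
    return sum(
        1
        for tip, pip in zip(FINGER_TIPS, FINGER_PIPS)
        if landmarks[tip][1] < landmarks[pip][1]
    )

def gesture_to_command(landmarks):
    """Return the command for the gesture, or None if it is not mapped."""
    return COMMANDS.get(count_raised_fingers(landmarks))
```

In a full application, the returned command name would then be dispatched to an automation layer; a rule-based counter like this one only works for an upright hand, which is one reason a trained classifier is the more robust choice.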

Identification of Cyanobacteria for Harmful Algal Blooms Research Using the YOLO Framework

This is a collaborative project with Dr. Meiyin Wu from the NJ Center for Water Science and Technology and Dr. Weitian Wang from the School of Computing. Cyanobacteria, an ancient type of photosynthetic microbe, inhabit most fresh and marine waters on Earth. The rapid growth of cyanobacteria can lead to Harmful Algal Blooms (HABs), posing major threats to water quality and aquatic ecosystems. Rapid and accurate identification of cyanobacteria is essential for population monitoring and mitigation efforts, especially when cyanobacteria produce toxins that threaten the health of wildlife and humans. However, the diverse shapes and appearances of cyanobacteria render manual identification time-consuming and error-prone. In this study, we make multiple novel contributions to the field of microscopic cyanobacterial identification using computer vision algorithms. We use the YOLOv5 algorithm, known for its speed and accuracy, and address limited dataset size and image heterogeneity. To combat overfitting and avoid unrealistic model performance values, we evaluate detection performance on common microscope artifacts (detritus and water bubbles), incorporate “background images” containing unrelated microorganisms into the dataset, and apply image augmentation conservatively. Finally, we tune hyperparameters with a genetic algorithm that optimizes a specified fitness function.
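The hyperparameter tuning step can be pictured with a small evolutionary loop. This is a minimal sketch, not the study's procedure: it uses mutation only (no crossover), and the search bounds, the hyperparameter names `lr0` and `momentum`, and `toy_fitness` are hypothetical stand-ins. In practice, the fitness value would come from training the detector and scoring it on validation data.

```python
import random

# Hypothetical search space: (lower bound, upper bound) per hyperparameter.
BOUNDS = {"lr0": (1e-5, 1e-1), "momentum": (0.6, 0.98)}

def toy_fitness(h):
    """Stand-in for a validation metric; peaks at lr0=0.01, momentum=0.9."""
    return -((h["lr0"] - 0.01) ** 2) * 1e3 - (h["momentum"] - 0.9) ** 2

def mutate(parent, rng, sigma=0.2):
    """Scale each value by Gaussian noise, then clip it to its bounds."""
    child = {}
    for name, (low, high) in BOUNDS.items():
        value = parent[name] * (1.0 + rng.gauss(0.0, sigma))
        child[name] = min(max(value, low), high)
    return child

def evolve(fitness, start, generations=50, seed=0):
    """Keep the best candidate seen so far and mutate it each generation."""
    rng = random.Random(seed)
    best, best_score = dict(start), fitness(start)
    for _ in range(generations):
        candidate = mutate(best, rng)
        score = fitness(candidate)
        if score > best_score:
            best, best_score = candidate, score
    return best, best_score

best, score = evolve(toy_fitness, {"lr0": 0.05, "momentum": 0.7})
print(best, score)
```

Because a candidate replaces the current best only when its fitness is strictly higher, the best score never decreases across generations.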

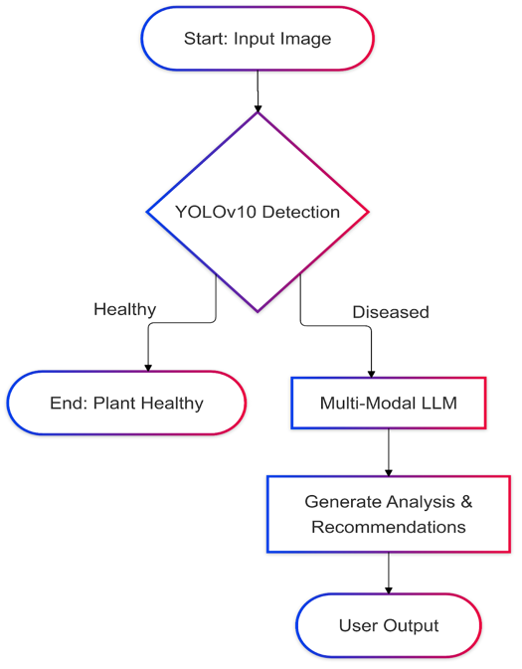

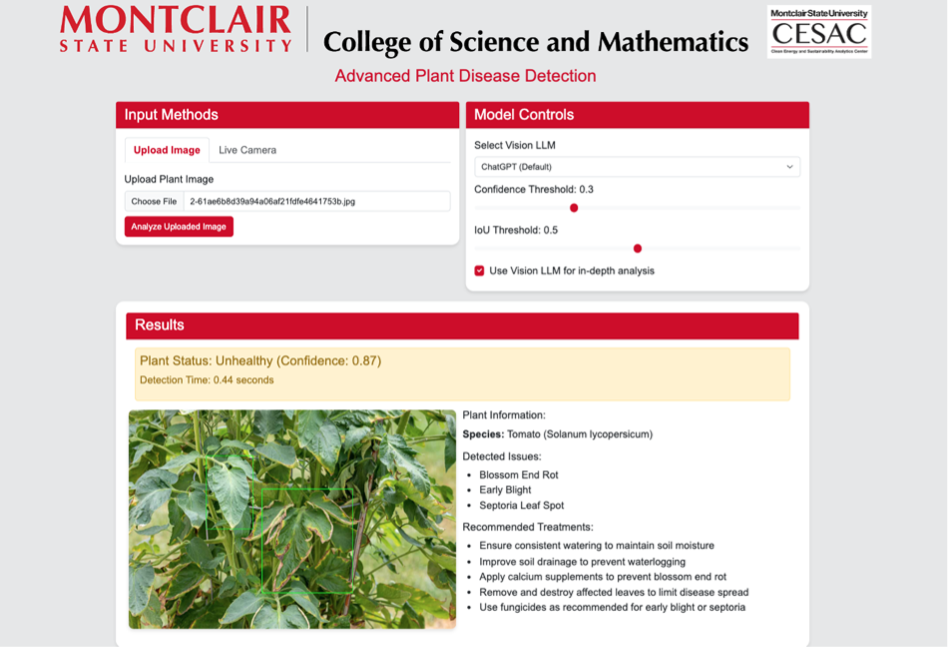

Novel Plant Disease Detection Using a Hybrid Approach of YOLOv10 and Multi-Modal LLM

This is a collaborative project with Dr. Pankaj Lal from the Clean Energy and Sustainability Analytics Center. Plant diseases significantly impact global food security, causing annual crop yield losses of up to 40%. This project introduces a novel hybrid approach for plant disease detection that integrates YOLOv10 with multi-modal large language models (LLMs). A binary classification task (healthy vs. diseased) was performed on the FieldPlant dataset of approximately 6,000 images, achieving a mean Average Precision at an IoU threshold of 0.5 (mAP50) of 0.85. The system uses YOLO as a fast filter for real-time efficiency and multi-modal LLMs to identify specific diseases and recommend treatments. Future research will incorporate temporal analysis for disease progression prediction and treatment monitoring.
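The two-stage design can be sketched as a simple routing function: a fast detector screens every image, and the slower multi-modal model is consulted only for images flagged as diseased. The sketch below is illustrative only. `detect` and `describe` are hypothetical callables standing in for the YOLO detector and the LLM, the 0.5 confidence threshold is an assumption, and no real YOLOv10 or LLM API is invoked.

```python
def diagnose(image, detect, describe, threshold=0.5):
    """Route an image through a fast filter, then an optional detailed stage.

    detect(image)   -> (label, confidence), label is "healthy" or "diseased"
    describe(image) -> free-text diagnosis and treatment advice
    Both callables are hypothetical stand-ins supplied by the caller.
    """
    label, confidence = detect(image)
    if confidence < threshold:
        # Low-confidence detections are flagged rather than diagnosed.
        return {"status": "uncertain", "diagnosis": None}
    if label == "healthy":
        # Healthy plants never reach the expensive second stage.
        return {"status": "healthy", "diagnosis": None}
    return {"status": "diseased", "diagnosis": describe(image)}
```

Keeping the expensive call behind the filter is what makes the combined system practical in real time: most frames are resolved by the detector alone.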